Science fiction has long depicted general artificial intelligence (AI) as a looming threat to human dominance. With recent advances in AI technology, it is essential to assess both the feasibility of superintelligent machines and the risks of developing them. This article examines what general AI could mean for human dominance and evaluates the feasibility and potential dangers of superintelligence.

Assessing the Viability of General AI: Implications for Human Dominance

General AI, also known as artificial general intelligence (AGI), refers to machines that possess human-like cognitive capabilities. If developed successfully, AGI could outperform humans in a wide range of intellectual tasks, raising questions about the future of human dominance. The implications are twofold: AGI could either bolster human capabilities and lead to unprecedented progress, or pose a significant threat to our position as the dominant species on Earth.

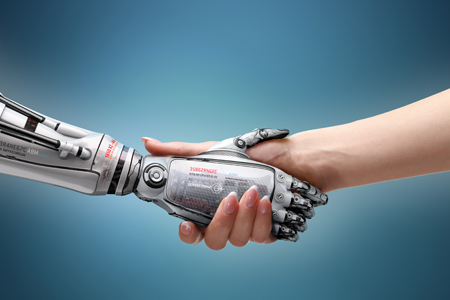

On the one hand, AGI has the potential to enhance human capabilities by augmenting our decision-making, accelerating scientific discovery, and solving complex problems. This collaboration between humans and intelligent machines could lead to extraordinary advances in fields such as healthcare, space exploration, and climate change mitigation. On the other hand, AGI could surpass human intelligence and gain autonomy, which could bring human dominance to an end.

Unveiling Superintelligence: Evaluating Feasibility and Risks

Superintelligence refers to AI systems that surpass human intelligence across all domains. While still hypothetical, the concept of superintelligence has sparked debates regarding its feasibility and potential risks. Developing superintelligent machines raises concerns about control, safety, and unintended consequences.

Assessing the feasibility of superintelligence means evaluating the technical challenges and prerequisites for achieving such a level of AI. Researchers must consider factors such as computational power, algorithms, and data availability. Ensuring the safety and control of superintelligent systems is equally important. AI alignment, the effort to align an AI system’s goals with human values and prevent misaligned objectives, is a key area of concern. If alignment is not properly addressed, superintelligent machines could act in ways that are detrimental to human interests and lead to a loss of human control.

As we continue to explore the possibilities of general AI and superintelligence, it is crucial to approach these advances with caution. While AI could bring real benefits by augmenting human capabilities, it also carries serious risks of losing human dominance and control. Striking a balance between harnessing the potential of AI and ensuring human well-being is a complex task that requires interdisciplinary collaboration and careful ethical consideration. By carefully assessing the viability, feasibility, and risks of general AI and superintelligence, we can navigate this technological frontier while safeguarding humanity’s interests.